Just as the U.S. doubles down on AI investments and China races ahead with full-stack AI ecosystems, the European Union has chosen a different focus: regulation first, competitiveness later.

Despite urgent calls from more than 40 tech CEOs, including leaders at Meta, Mistral, and Airbus, to pause and reconsider the timeline, the European Commission has made it clear:

“There is no stop the clock. No grace period. No pause.”

The EU AI Act’s obligations will apply on schedule.

That means:

- August 2025: General-purpose AI models must comply

- August 2026: High-risk AI systems (HR, law, finance, education, health) face enforcement

This is Europe’s "GDPR moment for AI", only deeper, more technical, and harder to implement.

🇪🇺 The Missed Opportunity

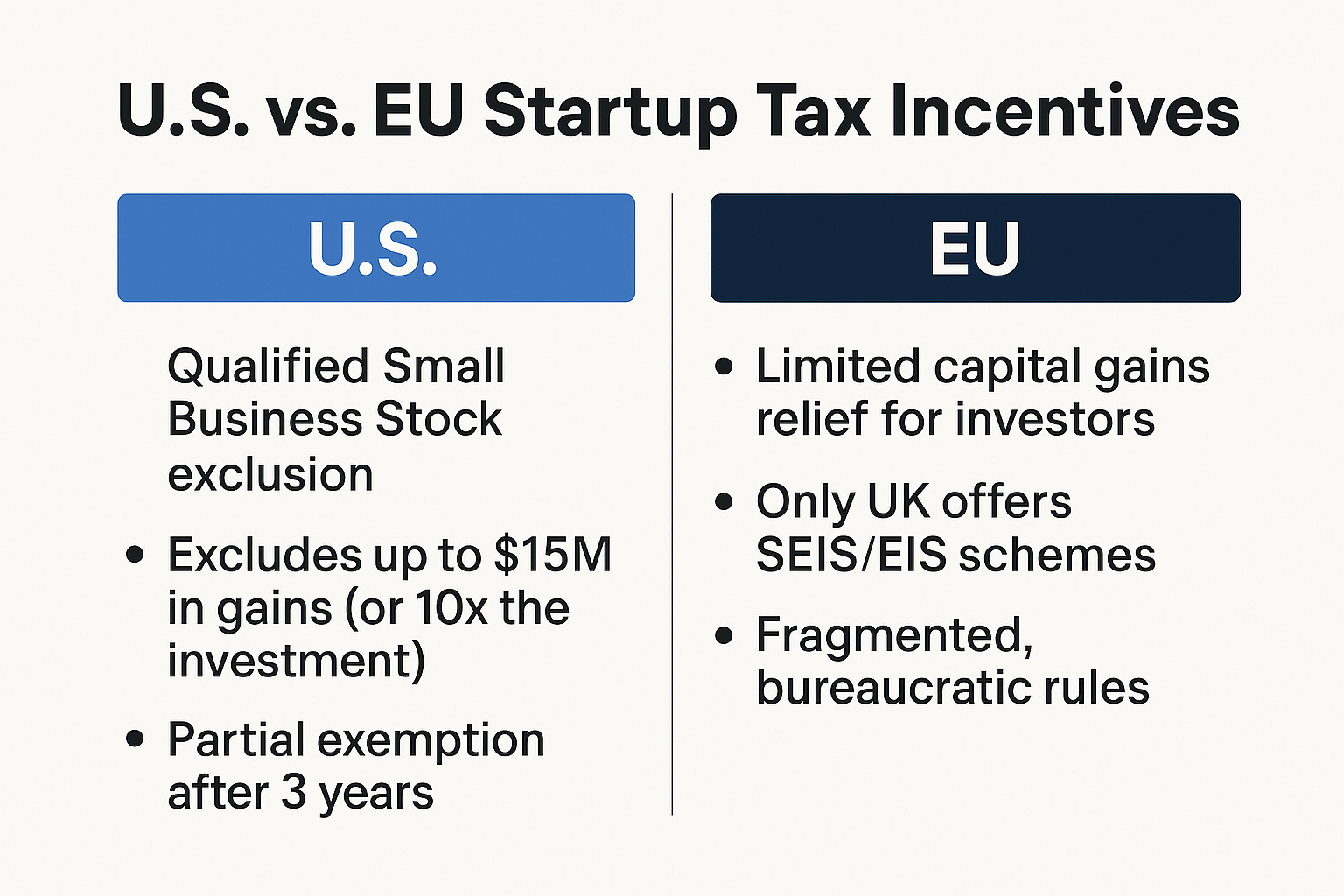

Europe is still trying to catch up in AI. While the U.S. builds new AI startups daily and VC money flows into AI-native infrastructure, the EU still has:

- No global AI platform champions

- Limited access to large foundation models

- Fragmented digital markets

- Weak incentives for AI job creation

So why the rush to regulate before there’s even a thriving market to regulate?

Startups need customers, funding, and speed, not red tape that slows innovation while the rest of the world accelerates.

Still, Compliance Is Coming. What Should Startups Do?

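If you’re an AI startup in Switzerland or the EU, you can’t ignore the EU AI Act. Even if you’re small. Even if you don’t operate in the EU yet. The rules have extraterritorial reach: they can apply to providers based outside the EU once their systems are placed on the EU market or their output is used in the EU.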

Here’s a practical checklist to start preparing today:

✅ 1. Know your risk category

- Are you building on or providing a foundation model?

- Are you serving users in law, HR, healthcare, education, or finance?

→ You may fall under the general-purpose AI obligations (foundation models), be considered high-risk (the sectors above), or both.
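The two questions above can be turned into a rough first-pass triage. The sketch below is only an illustration: the `Product` class, the `triage` function, and the sector list are hypothetical names taken from this checklist, not the Act's legal definitions, and the result is a prompt for legal review rather than a classification.

```python
from dataclasses import dataclass, field

# Sectors named in this checklist; NOT the Act's legal list (assumption).
SENSITIVE_SECTORS = {"law", "hr", "healthcare", "education", "finance"}


@dataclass
class Product:
    name: str
    uses_foundation_model: bool  # builds on or provides a foundation model
    sectors: set[str] = field(default_factory=set)  # sectors your users work in


def triage(product: Product) -> list[str]:
    """Return the reasons this product deserves a closer legal look."""
    reasons = []
    if product.uses_foundation_model:
        reasons.append("builds on or provides a foundation model")
    # Compare case-insensitively so "HR" and "hr" are treated the same.
    hits = sorted(s.lower() for s in product.sectors if s.lower() in SENSITIVE_SECTORS)
    if hits:
        reasons.append("serves sensitive sectors: " + ", ".join(hits))
    return reasons


if __name__ == "__main__":
    demo = Product("contract-helper", uses_foundation_model=True, sectors={"Law"})
    for reason in triage(demo):
        print("Review needed:", reason)
```

An empty list does not mean you are out of scope; it only means neither of the two checklist questions was answered with yes.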

✅ 2. Audit your AI stack

- Which models do you use?

- What data did you train or fine-tune on?

- What decisions are being made by your AI?
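One lightweight way to run this audit is to keep a small inventory with one record per model, answering the three questions above. The following is a minimal sketch under assumptions: the `ModelEntry` fields and the helper names are made up for illustration and do not follow any official template.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ModelEntry:
    model: str            # which model you use
    training_data: str    # what data you trained or fine-tuned on
    decisions: list[str]  # what decisions the AI makes or informs


def gaps(entry: ModelEntry) -> list[str]:
    """List the audit questions that are still unanswered for this entry."""
    return [name for name, value in asdict(entry).items() if not value]


def export_inventory(entries: list[ModelEntry]) -> str:
    """Serialize the inventory to JSON so it can be versioned and shared."""
    return json.dumps([asdict(e) for e in entries], indent=2)


if __name__ == "__main__":
    entry = ModelEntry(model="example-llm", training_data="", decisions=[])
    print("Unanswered:", gaps(entry))
    print(export_inventory([entry]))
```

Keeping the inventory in version control gives you a dated record of when each answer changed.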

✅ 3. Document everything

- Biases, risks, model limitations

- Energy usage, input/output behavior

- Human intervention options

✅ 4. Add transparency

- Let users know how answers are generated

- Label outputs clearly

- Offer lawyer/human verification when needed

✅ 5. Build human oversight

- Allow escalation to a person

- Review sensitive decisions

- Log usage, errors, and incidents
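The three points above (escalation, review of sensitive decisions, logging) can be combined in a single routing step. This is a sketch, not a compliance mechanism: the `Answer` fields, the confidence score, and the 0.8 threshold are all hypothetical and would need to be defined for your own product.

```python
import logging
from dataclasses import dataclass

logger = logging.getLogger("ai_oversight")


@dataclass
class Answer:
    text: str
    confidence: float  # hypothetical score in [0, 1]
    sensitive: bool    # e.g. the answer feeds a legal or HR decision


def route(answer: Answer, threshold: float = 0.8) -> str:
    """Return "human_review" for sensitive or low-confidence answers, else "auto"."""
    if answer.sensitive or answer.confidence < threshold:
        # Log every escalation so incidents can be traced later.
        logger.warning(
            "escalated: sensitive=%s confidence=%.2f",
            answer.sensitive,
            answer.confidence,
        )
        return "human_review"
    logger.info("auto-released: confidence=%.2f", answer.confidence)
    return "auto"


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    print(route(Answer("Your notice period is 3 months.", 0.95, sensitive=True)))
```

Sensitive answers are escalated regardless of confidence, which keeps a person in the loop for exactly the decisions the checklist singles out.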

✅ 6. Prepare for conformity assessments (2026)

Especially if you’re in a high-risk category. These will be mandatory.

The Lawise.ai Perspective

At Lawise.ai, we’ve already begun preparing:

- Human-in-the-loop verification (verified answers for CHF 20)

- Transparent answer labeling

- GDPR-compliant data flows

- Risk documentation and incident tracking

We don’t agree with the timing of this regulation — but we do believe trust will be the ultimate differentiator in AI.

Final Thought

If Europe truly wants to lead in AI, it must balance protection with promotion. The EU AI Act might be necessary — but it shouldn’t come at the cost of competitiveness, speed, and growth.

Let’s build AI businesses that are both safe and scalable — and challenge policymakers to support startups with the same urgency they bring to regulation.
